For each crit, two groups’ presentations were taken from Panopto recordings made in class. I then fully anonymised the videos (e.g. removing mentions of students from slides) and stored them in UAL’s password-protected OneDrive. Each participant was given an ID number starting with A for the first group observed and B for the second group observed.

An example video of Group A’s second crit is shown below.

To help analyse the videos, I used an online video annotation tool (https://codetta.codes/ux-qual/). The tool does not store the video or any data online (all data stays in UAL’s OneDrive). It allows you to tag points throughout the video (right-hand panel) and then move these tags around as sticky notes (bottom panel). A screenshot example is shown below.

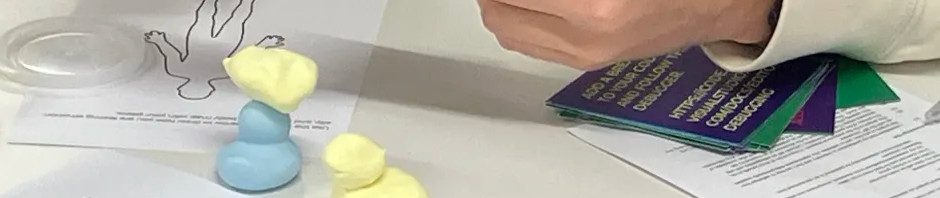

In addition, I printed out the observation sheets and scored them for each group, annotating the grid with any possible updates for the next iteration. An example for Group A Crit #2 is shown below. The full completed sheets can be found here.

A full table of the scores for the different groups throughout the crits is as follows:

| Group A | Crit #1 | Crit #2 | Crit #3 | Crit #4 |
| --- | --- | --- | --- | --- |
| Characteristics | 2 | 1 | 4 | 4 |
| Lived Experience | 3 | 1 | 3 | 1 |
| Inspirations | . | 3 | 1 | 3 |
| Emotions | 1 | 2 | 1 | 1 |
| Colleagues | 2 | 2 | 4 | 1 |
| Assumptions | 1 | 2 | 3 | 4 |
| Power | 1 | 1 | 3 | 2 |

| Group B | Crit #1 | Crit #2 | Crit #3 | Crit #4 |
| --- | --- | --- | --- | --- |
| Characteristics | 1 | 1 | 4 | 3 |
| Lived Experience | 3 | 1 | 1 | 1 |
| Inspirations | . | 2 | 1 | 3 |
| Emotions | 1 | 1 | 1 | 1 |
| Colleagues | 2 | 1 | 2 | 1 |
| Assumptions | 1 | 2 | 1 | 1 |
| Power | 1 | 1 | 3 | 3 |